We’ve all heard a ton about Google’s RankBrain over the last while, and yours truly even delved into it, and into a word vector patent as well. But something has felt incomplete. My geek friends and I have been waiting for the other shoe to drop.

You see, from all that we’ve learned, ol’ RankBrain has been described as something used to better understand queries, and it’s been most effective in query spaces that are more ambiguous (or involve unknown queries). To that end, I journeyed down a path that led me to word vectors, machine learning and neural nets (the AI component, potentially an extension of Google Brain).

But what about the other end of the spectrum? What about the actual indexing of documents?

How Google might use vectors to understand web pages

One thing I’ve learned is that vectors are likely the most efficient way to implement machine learning and neural nets (AI). If that’s the case, then we should also be looking at ways Google might use this approach to index the internet, not merely to handle queries.

With that in mind, this paper looks like it might describe an interesting approach:

Distributed Representations of Sentences and Documents (PDF)

Google Research

Quoc Le

Tomas Mikolov

Note: both authors worked on the Google Brain project.

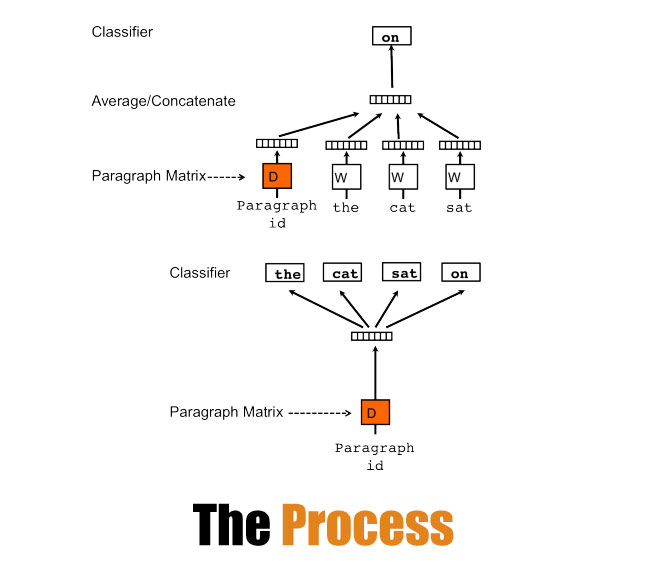

If you’ve read my word vector posts, you might recall CBOW (continuous bag of words). In this paper the authors discuss the bag-of-words (BOW) concept and state that:

“..(the) bag-of-words features have two major weaknesses: they lose the ordering of the words and they also ignore semantics of the words. For example, “powerful,” “strong” and “Paris” are equally distant. In this paper, we propose Paragraph Vector, an unsupervised algorithm that learns fixed-length feature representations from variable-length pieces of texts, such as sentences, paragraphs, and documents.”

They lament that with many current approaches, “strong” and “powerful” are no closer to each other than either is to “Paris”, even though they should be semantically closer. In short, BOW and bag-of-n-grams models don’t really capture semantic relations. Word2Vec-type approaches capture those relations for individual words, but on their own they don’t give you a representation of a whole sentence, paragraph or document; that appears to be the gap this paper sets out to fill.
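To make the “equally distant” point concrete, here’s a minimal sketch in Python. The three-word vocabulary and the dense vectors are hand-picked assumptions for illustration only; they are not output from Paragraph Vector, Word2Vec or any other trained model.

```python
import math

# Hypothetical three-word vocabulary; in bag-of-words each word is its own axis.
VOCAB = ["powerful", "strong", "paris"]


def one_hot(word):
    """Bag-of-words vector for a single word: 1.0 on its own axis, 0.0 elsewhere."""
    return [1.0 if w == word else 0.0 for w in VOCAB]


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Hand-picked dense vectors (NOT learned by any model) showing the kind of
# geometry a semantic embedding aims for: related words point the same way.
DENSE = {
    "powerful": [0.9, 0.8, 0.1],
    "strong": [0.85, 0.75, 0.2],
    "paris": [0.1, 0.2, 0.95],
}

for a, b in [("powerful", "strong"), ("powerful", "paris"), ("strong", "paris")]:
    bow_dist = euclidean(one_hot(a), one_hot(b))
    dense_sim = cosine(DENSE[a], DENSE[b])
    print(f"{a:>8} vs {b:<8} BOW distance={bow_dist:.3f}  dense cosine={dense_sim:.3f}")
```

Every pair of one-hot vectors is exactly the square root of 2 apart (with a cosine of 0), so a pure bag-of-words model has no way to tell that “powerful” and “strong” are related. The dense vectors put those two close together and “paris” far away, which is the property word and paragraph vectors try to learn from data.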

The authors “... show that Paragraph Vectors outperform bag-of-words models” and “... achieve new state-of-the-art results on several text classification and sentiment analysis tasks.”

Keep that last part in mind. While they did look at some indexing and retrieval tasks, much of the research was on sentiment analysis.

Paragraph Vectors

The Googlers explain that the name “paragraph vector” is simply meant to emphasize that the approach can be applied to anything from a phrase, to a sentence, to an entire document.

As with the other word vector approaches we’ve looked at in the past, this one is based on a machine learning model that learns by predicting words in a given paragraph. This prediction approach can help the system better understand context (and semantic relevance).
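For a rough feel of how that prediction works, below is a toy sketch of the paper’s “distributed memory” variant (PV-DM), written from the paper’s general description rather than from any Google code. Assumptions: the class name `TinyPVDM`, the tiny corpus, the vector size and the learning rate are all hypothetical; the paragraph vector is averaged with the previous two word vectors (the paper mentions averaging or concatenation); and a plain full softmax stands in for the hierarchical softmax the paper uses for speed. A new paragraph’s vector is inferred by training only that vector while the word vectors and output weights stay frozen.

```python
import math
import random


def softmax(z):
    """Numerically stable softmax over a list of scores."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


class TinyPVDM:
    """Hypothetical toy PV-DM: average one paragraph vector with the previous
    `window` word vectors, then predict the next word with a full softmax."""

    def __init__(self, docs, dim=8, window=2, seed=0):
        self.rng = random.Random(seed)
        self.docs = docs
        self.vocab = sorted({w for doc in docs for w in doc})
        self.idx = {w: i for i, w in enumerate(self.vocab)}
        self.dim, self.window = dim, window
        self.W = [self._rand_vec() for _ in self.vocab]  # word vectors, shared by all paragraphs
        self.D = [self._rand_vec() for _ in docs]        # one vector per paragraph
        self.U = [[0.0] * dim for _ in self.vocab]       # softmax output weights
        self.b = [0.0] * len(self.vocab)                 # softmax biases

    def _rand_vec(self):
        return [self.rng.uniform(-0.5, 0.5) / self.dim for _ in range(self.dim)]

    def _step(self, pvec, doc, t, lr, update_shared):
        """One SGD step predicting doc[t]; returns the cross-entropy loss."""
        ctx = [self.W[self.idx[w]] for w in doc[t - self.window:t]]
        inputs = [pvec] + ctx
        n = len(inputs)
        # Hidden vector: plain average of the paragraph and context word vectors.
        h = [sum(v[j] for v in inputs) / n for j in range(self.dim)]
        z = [sum(u[j] * h[j] for j in range(self.dim)) + bi
             for u, bi in zip(self.U, self.b)]
        p = softmax(z)
        target = self.idx[doc[t]]
        loss = -math.log(p[target])
        # Gradient of cross-entropy w.r.t. the scores is p - one_hot(target).
        dz = p[:]
        dz[target] -= 1.0
        dh = [sum(dz[i] * self.U[i][j] for i in range(len(dz)))
              for j in range(self.dim)]
        if update_shared:  # training: also update output layer and word vectors
            for i, g in enumerate(dz):
                for j in range(self.dim):
                    self.U[i][j] -= lr * g * h[j]
                self.b[i] -= lr * g
            for v in ctx:
                for j in range(self.dim):
                    v[j] -= lr * dh[j] / n
        for j in range(self.dim):  # the paragraph vector is always updated
            pvec[j] -= lr * dh[j] / n
        return loss

    def train(self, epochs=100, lr=0.2):
        """Train all parameters; returns the average loss for each epoch."""
        history = []
        for _ in range(epochs):
            total, count = 0.0, 0
            for d, doc in enumerate(self.docs):
                for t in range(self.window, len(doc)):
                    total += self._step(self.D[d], doc, t, lr, update_shared=True)
                    count += 1
            history.append(total / count)
        return history

    def infer(self, doc, steps=100, lr=0.2):
        """Infer a vector for a new paragraph with W, U and b frozen.
        Every word in `doc` must already be in the training vocabulary."""
        pvec = self._rand_vec()
        for _ in range(steps):
            for t in range(self.window, len(doc)):
                self._step(pvec, doc, t, lr, update_shared=False)
        return pvec


docs = [
    "the strong team won the match".split(),
    "the powerful team won the game".split(),
    "paris is the capital of france".split(),
]
model = TinyPVDM(docs)
history = model.train()
print(f"average loss: first epoch {history[0]:.3f}, last epoch {history[-1]:.3f}")
vec = model.infer("the strong team won the game".split())
print(f"inferred paragraph vector has {len(vec)} dimensions")
```

Because the paragraph vector is shared across every window drawn from the same paragraph, it ends up acting as a kind of memory of what that paragraph is about, which is the intuition the authors give for the “distributed memory” name. Real implementations add tricks this sketch skips (hierarchical softmax or negative sampling, subsampling, learning-rate decay).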

They also state that, “Our technique is inspired by the recent work in learning vector representations of words using neural networks.” That’s, again, the kind of work we’ve discussed in the context of RankBrain and Word2Vec.

And it seems to be working:

“For example, on sentiment analysis task, we achieve new state-of-the-art results, better than complex methods, yielding a relative improvement of more than 16% in terms of error rate. On a text classification task, our method convincingly beats bag-of-words models, giving a relative improvement of about 30%.”
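Note that these are relative improvements, not percentage-point drops. A quick sketch of the difference, using made-up error rates (hypothetical, not the paper’s figures):

```python
def relative_improvement(baseline_error, new_error):
    """Fraction by which the error rate shrinks, relative to the baseline."""
    return (baseline_error - new_error) / baseline_error


# Hypothetical error rates for illustration only (not taken from the paper).
baseline, new = 0.20, 0.16
print(f"absolute drop: {(baseline - new) * 100:.1f} percentage points")
print(f"relative improvement: {relative_improvement(baseline, new):.0%}")
```

So going from 20% error to 16% error is only a 4-point absolute drop, but a 20% relative improvement.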

It’s important to note that this paper is from 2014 and focused primarily on sentiment analysis, with some minor indexing and retrieval tasks. Where it has gone since then is anyone’s guess.

Is this part of RankBrain?

Who knows… I don’t. Do you?

The authors of this paper also worked on the Word2Vec project (and patent), which seems to be part of that puzzle. They also worked on the Google Brain project, which could be part of the machine learning and neural net elements of RankBrain.

As such, there are some interesting connections here. But we can’t know whether some form of this has been implemented, or whether it’s part of what Google considers to be RankBrain. So take that for what you will.

I have wondered about the name, RankBrain, and why Google has only stated that it’s part of query analysis and classification. Could something like this be the indexing and retrieval element? We may never know.

What does this mean for your SEO efforts?

Yes… it’s sad that I have to go down this road each time I write about it, but we shall.

My general advice remains about the same as when I wrote about RankBrain. We shouldn’t look at these developments (in vector approaches, machine learning and neural nets) as something to be targeted. This is about the evolution of search.

Be relevant: if anything, I can see these kinds of approaches helping to improve everything from retrieving more relevant documents, to sentiment analysis (reviews, etc.), to fighting spam. If you are crafting content that has semantic value and is authoritative, you should be fine. Standard signals (scoring, boosting and dampening) would still be in play.

Watch conversational search: I still can’t shake the feeling that all roads lead here. While this particular part of the puzzle isn’t directly connected, it still ties into query classification, which is part of voice search these days. Consider the vernacular of your target demographic, and write toward that.

Much like with patents, we never know where this journey leads. All I am trying to do is give some insight into the evolution. We don’t even know what RankBrain consists of. But ultimately, vector and machine learning technology is sure to go beyond mere query analysis and classification.

Take that for what you will.

Other works from Tomas Mikolov and Quoc Le:

- Google Scholar listing for Tomas

- Google Scholar listing for Quoc

- Classifying Data Objects (patent)

- Computing numeric representations of words in a high-dimensional space (patent)

- Relational similarity measurement (patent)

- Feature-Augmented Neural Networks and Applications of Same (patent)

Some interesting research from Microsoft: Doc2Sent2Vec: A Novel Two-Phase Approach for Learning Document Representation (PDF)